Polymath Engineer Weekly #62

Click with curiosity

Hello again. This was a YouTube-heavy week for me. You're in luck if you're a lazy reader. Enjoy 😎

Links of the week

Watching Neural Networks Learn

Do Machine Learning Models Memorize or Generalize?

In this article we’ll examine the training dynamics of a tiny model and reverse engineer the solution it finds – and in the process provide an illustration of the exciting emerging field of mechanistic interpretability [9, 10]. While it isn’t yet clear how to apply these techniques to today’s largest models, starting small makes it easier to develop intuitions as we progress towards answering these critical questions about large language models.

If AI becomes conscious, how will we know?

Now, a group of 19 computer scientists, neuroscientists, and philosophers has come up with an approach: not a single definitive test, but a lengthy checklist of attributes that, together, could suggest but not prove an AI is conscious. In a 120-page discussion paper posted as a preprint this week, the researchers draw on theories of human consciousness to propose 14 criteria, and then apply them to existing AI architectures, including the type of model that powers ChatGPT.

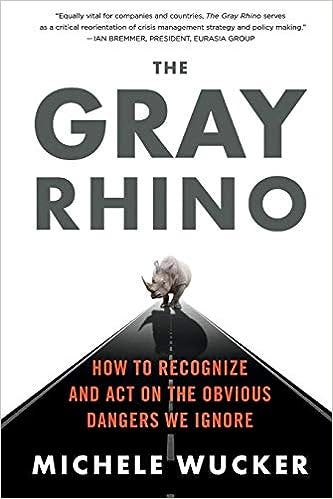

Book of the Week

The Gray Rhino: How to Recognize and Act on the Obvious Dangers We Ignore

Do you have any more links our community should read? Feel free to post them in the comments.

Have a nice week. 😉

Have you read last week's post? Check the archive.